Temporal Consistency Learning of Inter-Frames for Video Super-Resolution

Meiqin Liu1, Shuo Jin1, Chao Yao2, Chunyu Lin1, Yao Zhao1

1 Beijing Jiaotong University, Beijing, China

2 University of Science and Technology Beijing, Beijing, China

Abstract

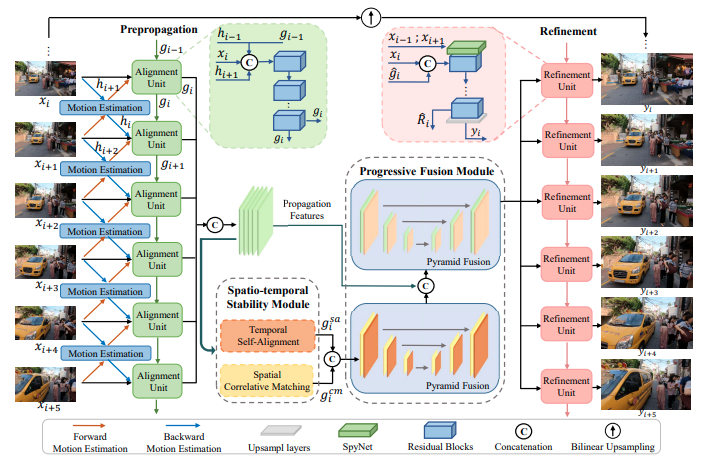

Video super-resolution (VSR) aims to reconstruct high-resolution (HR) frames from a low-resolution (LR) reference frame and multiple neighboring frames. The key operation is to exploit the relatively misaligned neighboring frames when reconstructing the current frame while preserving the consistency of the results. Existing methods generally explore information propagation and frame alignment to improve VSR performance. However, few studies focus on the temporal consistency of inter-frames. In this paper, we propose a Temporal Consistency learning Network (TCNet) for VSR in an end-to-end manner, to enhance the consistency of the reconstructed videos. A spatio-temporal stability module is designed to learn self-alignment from inter-frames. Specifically, correlative matching is employed to exploit the spatial dependency from each frame and maintain structural stability. Moreover, a self-attention mechanism is utilized to learn the temporal correspondence and implement an adaptive warping operation for temporal consistency across multiple frames. In addition, a hybrid recurrent architecture is designed to leverage both short-term and long-term information. We further present a progressive fusion module that performs a multi-stage fusion of spatio-temporal features, and the final reconstructed frames are refined by these fused features. Objective and subjective results of various experiments demonstrate that TCNet achieves superior performance on different benchmark datasets compared to several state-of-the-art methods.
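To make the idea of attention-based temporal correspondence more concrete, here is a minimal sketch in pure Python. It is not TCNet's module: it assumes generic scaled dot-product attention at a single spatial location, and the names `temporal_attention`, `reference`, and `neighbors` are hypothetical. It only shows how similarity to the current frame can decide how much each neighboring frame contributes.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def temporal_attention(reference, neighbors):
    """Aggregate neighbor features, weighted by similarity to the reference.

    reference: feature vector at one location of the current frame (assumed).
    neighbors: feature vectors at the same location in other frames (assumed).
    Returns (weights, aggregated_vector).
    """
    scale = math.sqrt(len(reference))
    scores = [dot(reference, n) / scale for n in neighbors]
    weights = softmax(scores)
    aggregated = [
        sum(w * n[i] for w, n in zip(weights, neighbors))
        for i in range(len(reference))
    ]
    return weights, aggregated


# Toy example: the first neighbor matches the reference best,
# so it receives the largest weight.
reference = [1.0, 0.0]
neighbors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
weights, aggregated = temporal_attention(reference, neighbors)
```

In a real network the features would be learned tensors and the weighting would be computed for every location; the toy above only illustrates the weighting step.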

Pipeline

Highlights

- We introduce a temporal consistency learning strategy designed for the alignment of temporal information from inter-frames. The global motion tendency is learned across multiple frames to enhance the spatio-temporal stability.

- We redesign a hybrid recurrent architecture for information propagation. It jointly takes advantage of the sliding-window and recurrent network designs, so it can exploit both short-term and long-term information.

- We adopt a progressive fusion module based on a pyramid structure to efficiently aggregate the consistent features. The final restored frames are refined with these fused features.
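The data flow behind a hybrid of sliding-window and recurrent propagation can be sketched as follows. This is a toy illustration under stated assumptions, not the paper's architecture: frames are plain lists of floats, the fusion step is a simple average, and the names `window_indices`, `hybrid_propagate`, `radius`, and `momentum` are hypothetical.

```python
def window_indices(t, num_frames, radius=1):
    """Indices of the local window around frame t, clamped at sequence borders."""
    return [min(max(t + d, 0), num_frames - 1) for d in range(-radius, radius + 1)]


def average(vectors):
    """Placeholder fusion: element-wise mean of equally sized vectors."""
    return [sum(vals) / len(vectors) for vals in zip(*vectors)]


def hybrid_propagate(frames, radius=1, momentum=0.5):
    """Toy hybrid propagation over a frame sequence.

    Short-term: fuse the frames inside a sliding window around t.
    Long-term: blend that result with a hidden state carried from frame t-1.
    """
    hidden = [0.0] * len(frames[0])  # assumed zero initial state
    outputs = []
    for t in range(len(frames)):
        local = average([frames[i] for i in window_indices(t, len(frames), radius)])
        hidden = [momentum * h + (1.0 - momentum) * l for h, l in zip(hidden, local)]
        outputs.append(hidden)
    return outputs


# Three constant one-pixel "frames": the hidden state approaches the
# frame value as information accumulates over time.
outputs = hybrid_propagate([[2.0], [2.0], [2.0]])
# outputs == [[1.0], [1.5], [1.75]]
```

The window supplies information from immediate neighbors at every step, while the hidden state accumulates information from all earlier frames, which is the short-term and long-term split described in the second highlight.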

Results

Materials

Citation

@article{liu2022temporal,
  title={Temporal Consistency Learning of Inter-Frames for Video Super-Resolution},
  author={Liu, Meiqin and Jin, Shuo and Yao, Chao and Lin, Chunyu and Zhao, Yao},
  journal={IEEE Transactions on Circuits and Systems for Video Technology},
  volume={33},
  number={4},
  pages={1507--1520},
  year={2022},
  publisher={IEEE}
}

Contact

If you have any questions, please contact Chao Yao at yaochao@ustb.edu.cn